Optimizing our Delivery Process with Data Science

At Mercadoni we are changing how customers buy groceries in Latin America. We have currently partnered with more than 80% of the biggest retailers in Colombia, Mexico, and Argentina, which lets us deliver groceries from more than 200 different locations in Latam.

On a weekly basis, our 3,000+ shoppers buy and deliver more than 100,000 different products from our retailers all over Latam. Failing to complete this process on time means not only missing a customer's expectation but also delaying the following scheduled orders and disrupting our operations.

The Challenge

As simple as our delivery process may seem, we had a huge problem with the money we transferred to our shoppers' debit cards just before they paid at the supermarket cashier: in about 40% of our daily orders, we were sending less money than the shopper needed.

This caused huge delays in our process, since shoppers had to wait for someone to manually transfer the missing money (with thousands of orders per day, this could take hours). On top of that, retailers were really pissed off because our shoppers were blocking their cashiers and their own customers were unhappy about it.

The Root Cause

To give you guys some context, this problem comes from data inconsistencies between the moment our customers place an order and the moment our shoppers pick the products:

- Price fluctuations: Prices vary daily in supermarkets, so the price you saw in our app may have changed by the time the shopper reaches the cashier.

- Weight variations: The price of products charged by weight, such as fruits and vegetables, is very difficult to predict, since shoppers can only pick approximate amounts.

- Product changes: Customers may ask for special products that are not shown in the app, or change products while the shopper is picking. In these cases the total at the cashier will almost certainly be different.

The First Attempt: A Fixed Percentage

The first solution that we tried was driven by our mythical entrepreneurial common sense: a "quick win" fixed percentage increase on every money transfer. A simple Excel analysis showed that a flat 25% increase would ensure that 70% of the orders could be paid at the cashier…

However, since our provider charges us for any amount transferred to debit cards, this cost increased by more than 25% the month we did it. In addition, giving more money to our shoppers increased fraud: some of them accumulated money on their debit cards, bought things for themselves at supermarkets, and then disappeared.

In the end, the solution was generating more problems than it solved.
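To make the trade-off concrete, here is a minimal Python sketch of the kind of check such an analysis performs. The order totals are made-up numbers and the function name `evaluate_markup` is hypothetical; it only illustrates that a flat markup raises the surplus money sent to shoppers along with the share of orders that can be paid.

```python
def evaluate_markup(app_totals, cashier_totals, markup):
    """Apply a flat markup to every app total.

    Returns (share of orders fully covered, total surplus money sent).
    """
    transfers = [total * (1 + markup) for total in app_totals]
    pairs = list(zip(transfers, cashier_totals))
    covered = sum(sent >= real for sent, real in pairs)
    surplus = sum(max(sent - real, 0.0) for sent, real in pairs)
    return covered / len(pairs), surplus


# Made-up totals: what the app showed vs. what the cashier charged.
app_totals = [100.0, 80.0, 50.0, 120.0, 60.0]
cashier_totals = [108.0, 78.0, 70.0, 125.0, 61.0]

for markup in (0.0, 0.10, 0.25):
    coverage, surplus = evaluate_markup(app_totals, cashier_totals, markup)
    print(f"markup={markup:.0%} coverage={coverage:.0%} surplus={surplus:.2f}")
```

In this toy data, going from a 10% to a 25% markup does not cover any additional order; it only leaves more idle money on the cards, which is the side effect described above.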

A Data Science Approach

Frustrated by our common sense, we decided to use data science to solve this situation. To be honest, at the beginning it was difficult to convince the team. In startups like ours, we are obsessed with speed. And an exploratory data analysis with no clear deadlines is like Voldemort in this environment.

This article is the first of many that we want to publish to change this mistaken belief. Data Science and Machine Learning should not be confused with complexity. On the contrary, they are synonymous with scalability and optimization. In the end, we solved the problem in less time and with better results than expected.

1. A Mathematical Problem

The first thing we did was to define our problem in mathematical terms. In particular, we can see it as an optimization problem where the objective value is the money we transfer. In other words, we must find a function of several dimensions, such as order price, number of products, and retailer, that minimizes the difference between the money we transfer and the real price of the order.

Optimization Goal:

min(|money_transferred - real_price|)

With the constraint that money_transferred ≥ real_price in at least 90% of the cases

2. Our Baseline

The second step was to define a baseline to compare our results against. We took the data from the last month and calculated the percentage of orders that were paid with the money we transferred. We also calculated the average difference between the money we transferred and the real price of the order. These two metrics became our baseline.
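As a rough illustration, the two baseline metrics and the 90% constraint from the optimization goal could be computed as follows. This is a sketch with invented numbers; the function names `baseline_metrics` and `meets_target` are assumptions made for the example, not our production code.

```python
def baseline_metrics(transferred, real_prices):
    """Return (coverage, mean absolute difference) for a set of past orders.

    coverage: share of orders where money_transferred >= real_price.
    mean absolute difference: average of |money_transferred - real_price|.
    """
    pairs = list(zip(transferred, real_prices))
    coverage = sum(sent >= real for sent, real in pairs) / len(pairs)
    mean_abs_diff = sum(abs(sent - real) for sent, real in pairs) / len(pairs)
    return coverage, mean_abs_diff


def meets_target(coverage, target=0.90):
    """Check the constraint: at least 90% of orders must be covered."""
    return coverage >= target


# Illustrative numbers only, not Mercadoni data.
transferred = [100.0, 80.0, 50.0, 120.0]
real_prices = [96.0, 84.0, 50.0, 110.0]

coverage, mean_abs_diff = baseline_metrics(transferred, real_prices)
print(coverage, mean_abs_diff, meets_target(coverage))
```

Any candidate model can then be scored with the same two numbers, which makes it directly comparable with the baseline.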

3. Exploratory Data Analysis

The third step was to explore our data to find patterns and correlations that could help us define the features of our model. We explored the data from different angles and found some interesting insights.

Retailer Type

We found that retailers that are specialized in fruits and vegetables registered more differences between our prediction and the real price of our orders. So, we defined the retailer type as one of the features.

Stock Update Frequency

We also found that retailers that update their stock frequently in Mercadoni registered the smallest differences between our prediction and the real price of our orders. Thus, an important feature for our model is the stock update frequency.

Product Availability

We also explored correlations between the orders in which our shoppers do not find some of the products the customer ordered and the price difference of our predictions. The more products they report as not found or replaced, the more variation we have.

Order Composition

The percentage of products charged by weight in an order was also a significant factor. Orders with a higher proportion of fruits, vegetables, and other weight-based items showed greater price variability.
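The insights above translate into a handful of per-order features. The sketch below shows one way they might be assembled in Python; every field and key name (`sold_by_weight`, `weight_share`, `changed_share`, and so on) is hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Item:
    sold_by_weight: bool  # e.g. fruits and vegetables
    not_found: bool       # shopper reported the product as unavailable
    replaced: bool        # shopper swapped it for another product


def order_features(items, retailer_type, stock_updates_per_week):
    """Build a flat feature dict for one order (all names are hypothetical)."""
    n = len(items)
    return {
        "retailer_type": retailer_type,
        "stock_updates_per_week": stock_updates_per_week,
        "n_products": n,
        # Share of items whose price depends on the picked weight.
        "weight_share": sum(i.sold_by_weight for i in items) / n,
        # Share of items reported as not found or replaced.
        "changed_share": sum(i.not_found or i.replaced for i in items) / n,
    }


items = [
    Item(sold_by_weight=True, not_found=False, replaced=False),
    Item(sold_by_weight=True, not_found=False, replaced=True),
    Item(sold_by_weight=False, not_found=True, replaced=False),
    Item(sold_by_weight=False, not_found=False, replaced=False),
]
features = order_features(items, retailer_type="produce", stock_updates_per_week=7)
print(features)
```

Note that whether an item ends up not found or replaced is only known after picking, so a feature like `changed_share` would have to be estimated from historical rates when predicting a new order.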

4. Model Definition and Testing

The last part of this process is to create our data model. That is, to implement a function that, based on the features we defined in step 3, predicts an optimized value for the money we transfer. We also have to consider that this function is going to be called many times per day, even per minute. Thus, we chose to clean some of our features and implement a relatively simple function based on regression.

In particular, we minimized the mean squared error (MSE) with an extra constraint in mind: ensuring that 90% of our estimates were above the real value.

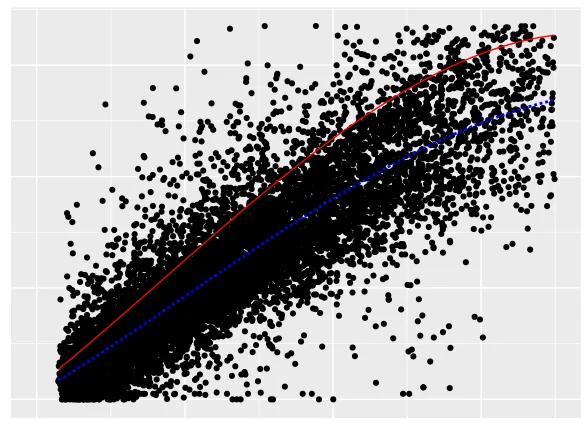

Quantile Regression: The Key Insight

But a simple linear regression wasn't enough. It tends to center the error around zero, so many estimates fall below the real value, whereas we need 90% of the estimates to be above it without inflating transaction fees. For this case in particular, quantile regression was the best solution because it estimates a chosen quantile directly.

In our tests, it slightly increased the MSE, but it ensures that almost all of our shoppers have their money on time. Transactions we still have doubts about are assigned to manual support.
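The difference between the two objectives can be seen even without features. The pure-Python sketch below (made-up totals, hypothetical function names, and not our actual model) compares the constant that minimizes squared error, which is the mean, with the constant that minimizes the pinball loss used by quantile regression at q = 0.9.

```python
def pinball_loss(actual, predicted, q):
    """Average pinball (quantile) loss.

    Under-estimates are weighted by q and over-estimates by 1 - q,
    so a high q pushes predictions above most observed values.
    """
    total = 0.0
    for y, y_hat in zip(actual, predicted):
        diff = y - y_hat
        total += q * diff if diff >= 0 else (q - 1) * diff
    return total / len(actual)


def coverage(actual, predicted):
    """Share of cases where the prediction is at least the real value."""
    return sum(p >= y for y, p in zip(actual, predicted)) / len(actual)


# Made-up real order totals.
real_totals = [42.0, 55.0, 38.0, 61.0, 47.0, 90.0, 52.0, 44.0, 58.0, 49.0, 66.0]
n = len(real_totals)

# The constant that minimizes squared error is the mean.
mean_guess = sum(real_totals) / n

# The constant that minimizes pinball loss, searched over observed values.
q = 0.9
quantile_guess = min(
    real_totals, key=lambda c: pinball_loss(real_totals, [c] * n, q)
)

print("mean:", coverage(real_totals, [mean_guess] * n))
print("q=0.9:", coverage(real_totals, [quantile_guess] * n))
```

A full quantile regression applies the same loss to a function of the order features instead of a constant, which is why it can target the 90% requirement directly while a squared-error fit cannot.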

Results and Impact

The service has been up for almost two months, and we have achieved remarkable improvements:

- 70% reduction in our expenditure on transaction fees

- 80% reduction in the number of manual transfers

- Significant decrease in cashier delays and customer complaints

- Improved relationships with retail partners thanks to a smoother checkout process

Conclusions

The main things we learnt from this project were:

- Problem definition is crucial: With a good problem definition you get key metrics to validate against and a baseline

- Visualization reveals patterns: Data visualization lets you test your hypotheses quickly and build better features for your model

- Focus on business metrics: You are not seeking the best MSE or accuracy. You are seeking business outcomes instead: cost reduction, automation, and on-time processes

- Keep it simple: A simple and fast data science service lets you scale and validate at a good pace

Many other data science processes have to be supported by manual processes such as data labeling, and for that reason we are now launching a game that will help us label products and prices. We are just scratching the surface of Data Science. Come, join us and help us shape the future of groceries in Latam.

Originally published on Medium